HoloLens for Computer Vision in Unity


Sample highlights

HoloLensForCV-Unity is a sample project that incorporates Microsoft's HoloLensForCV sample into a C# Unity project using IL2CPP Windows Runtime Support.

Windows Runtime Support in Unity

The project enables access to all HoloLens research mode streams through Unity C# code using the HoloLensForCV Windows Metadata file.

Unity defines the ENABLE_WINMD_SUPPORT symbol when Windows Runtime Support is enabled for the build. Checking this symbol with an #if directive confirms that runtime support is available, and any call to a custom .winmd Windows API must be enclosed within that check.

// C# Unity

using UnityEngine;

// Enclose code within this #define directive

#if ENABLE_WINMD_SUPPORT

// WinMD file

using HoloLensForCV;

#endif

#if ENABLE_WINMD_SUPPORT

public class MyClass : MonoBehaviour

{

// Instance of Windows API class SensorFrameStreamer

private SensorFrameStreamer _sensorFrameStreamerPv;

}

#endif

Windows Runtime Support makes it far easier to integrate other libraries into Universal Windows Platform projects than it was with dynamic-link libraries (DLLs). Microsoft has detailed documentation on implementing Windows Runtime components in C++. Below is a simple example.

Runtime Support Example

C++ sample

In Visual Studio, create a new C++ Windows Runtime (WinRT) component with the following code:

// .header file

// ref class definition

public ref class MyRuntimeClass sealed

{

public:

// Constructor

MyRuntimeClass();

// Method to check if initialized

bool IsClassInitialized();

private:

bool _isRuntimeInitialized = false;

};

// .cpp file

MyRuntimeClass::MyRuntimeClass()

{

_isRuntimeInitialized = true;

}

bool MyRuntimeClass::IsClassInitialized()

{

return _isRuntimeInitialized;

}

Build the C++ sample and copy all of the output files into the Assets->Plugins->x86/x64 folder of the Unity project.

Unity C# sample

The C++ WinRT component above can now be accessed from Unity C# through IL2CPP build support. Open the Unity C# scripts in Visual Studio and select the Assembly-CSharp.Player project in the code view; otherwise, code inside the #if ENABLE_WINMD_SUPPORT directive will appear greyed out.

// C# Unity

using UnityEngine;

// Class derives from MonoBehaviour

public class RuntimeSampleUnity : MonoBehaviour

{

#if ENABLE_WINMD_SUPPORT

private MyRuntimeClass _myRuntimeClass;

#endif

// Default MonoBehaviour method

void Start()

{

#if ENABLE_WINMD_SUPPORT

// New instance of runtime class

_myRuntimeClass = new MyRuntimeClass();

// Check to see if we initialized C++ runtime component

var isInit = _myRuntimeClass.IsClassInitialized();

Debug.LogFormat("MyRuntimeClass: {0}", isInit);

#endif

}

}

Attach the runtime sample script to a game object, build the Unity project, and deploy it to a UWP device. The WinRT component should be accessible through the C# Unity environment on the device, though not in the Unity Editor window.

HoloLensForCV-Unity sample

As mentioned, this sample provides access to the HoloLens research mode streams and integrates OpenCV, enabling real-time processing of sensor frames streamed from the Microsoft HoloLens device in a C# Unity environment using IL2CPP Windows Runtime Support. Here is a link to the project on my personal GitHub repo.

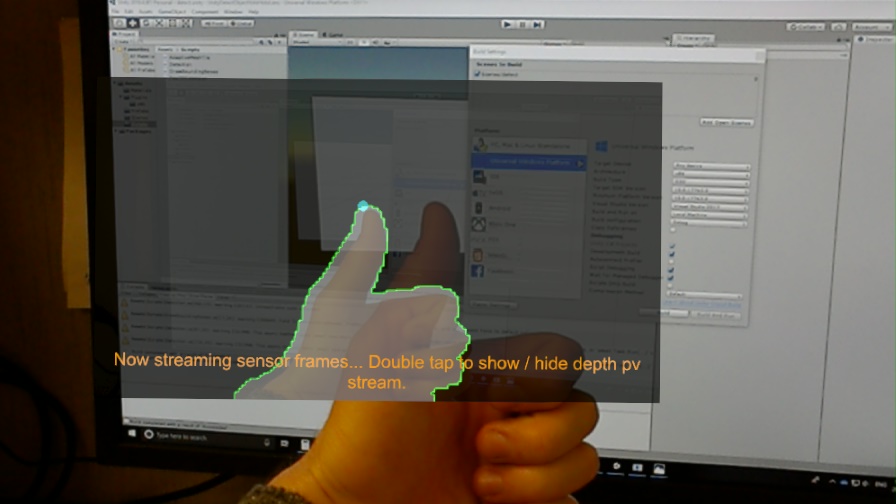

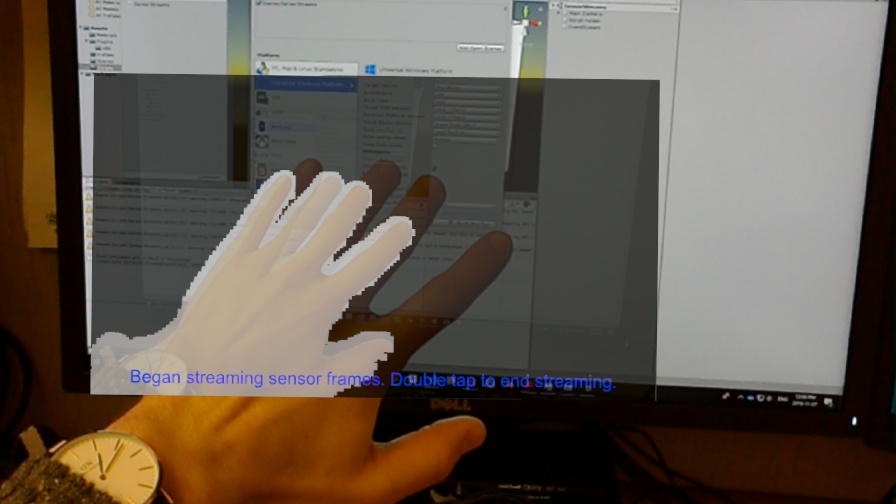

The sample includes a Unity project which maps the short-throw time-of-flight (ToF) depth stream to the PV camera stream.

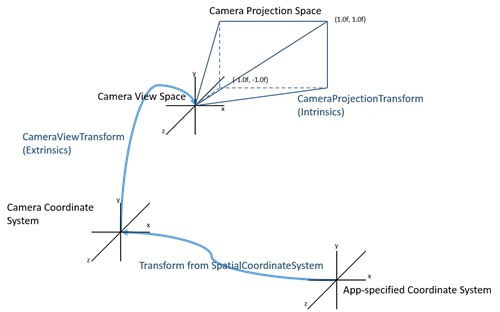

To map the depth frames to PV frames we require the following 4 x 4 transformation matrices:

- Depth camera: frame to origin transform (frameToOriginDepth)

- Depth camera: camera to view transform (cameraToViewDepth)

- PV camera: frame to origin transform (frameToOriginPV)

- PV camera: camera to view transform (cameraToViewPV)

- PV camera: camera projection transform (cameraToProjectionPV)

The below image provides some more detail into how these camera transforms interact.

The mapping follows a 2D -> 3D -> 3D -> 2D set of transformations, resulting in 2D fused depth-PV frames.

First, compute the inverse transforms needed to relate depth frame coordinates to PV frame coordinates:

viewToCameraDepth = cameraToViewDepth^-1

originToFramePV = frameToOriginPV^-1
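As an illustration of the inversion step, here is a minimal C++ sketch. It assumes each transform is rigid (a rotation plus a translation) and is stored for row vectors, so that a point is transformed as p * M with the translation in the last row. The `Mat4` alias and the `InvertRigid` name are hypothetical and not part of HoloLensForCV; a general-purpose 4 x 4 inverse from a math library would work just as well.

```cpp
#include <array>

// Hypothetical 4 x 4 matrix type, indexed as m[row][col].
using Mat4 = std::array<std::array<float, 4>, 4>;

// Invert a rigid transform laid out for row vectors (p' = p * M):
// the upper-left 3 x 3 block is a rotation R, the last row holds the translation t.
// Assumption: M has no scale or shear. The inverse is then [R^T 0; -t * R^T 1].
Mat4 InvertRigid(const Mat4& m)
{
    Mat4 inv{}; // zero-initialized

    // Transpose the rotation block.
    for (int r = 0; r < 3; ++r)
        for (int c = 0; c < 3; ++c)
            inv[r][c] = m[c][r];

    // New translation row: -t * R^T.
    for (int c = 0; c < 3; ++c)
        inv[3][c] = -(m[3][0] * m[c][0] + m[3][1] * m[c][1] + m[3][2] * m[c][2]);

    inv[3][3] = 1.0f;
    return inv;
}
```

With this helper, viewToCameraDepth would be `InvertRigid(cameraToViewDepth)` and originToFramePV would be `InvertRigid(frameToOriginPV)`, provided the rigid-transform assumption holds for the matrices reported by the device.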

Next, build the 4 x 4 depth-to-PV transformation matrix as a chain of transformations. We first map the 2D depth frame from view space to depth camera space, then from the depth camera frame to the origin. From the origin, we map to the PV camera frame, from the PV camera frame to PV view space, and finally from PV view space to PV projection space.

depthToPV = viewToCameraDepth * frameToOriginDepth * originToFramePV * cameraToViewPV * cameraToProjectionPV
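The chain above can be sketched in C++ as a sequence of matrix products. This is an illustrative sketch rather than the sample's actual implementation: `Mat4`, `Identity`, `Multiply`, and `ComposeDepthToPv` are hypothetical names, and the matrices are assumed to follow the row-vector convention used in the formulas here, so the leftmost matrix is applied to a point first.

```cpp
#include <array>

// Hypothetical 4 x 4 matrix type, indexed as m[row][col].
using Mat4 = std::array<std::array<float, 4>, 4>;

// Build an identity matrix.
Mat4 Identity()
{
    Mat4 m{};
    for (int i = 0; i < 4; ++i)
        m[i][i] = 1.0f;
    return m;
}

// Standard matrix product a * b.
Mat4 Multiply(const Mat4& a, const Mat4& b)
{
    Mat4 out{};
    for (int r = 0; r < 4; ++r)
        for (int c = 0; c < 4; ++c)
            for (int k = 0; k < 4; ++k)
                out[r][c] += a[r][k] * b[k][c];
    return out;
}

// Compose the depth-to-PV chain in the same left-to-right order as the formula.
Mat4 ComposeDepthToPv(const Mat4& viewToCameraDepth,
                      const Mat4& frameToOriginDepth,
                      const Mat4& originToFramePV,
                      const Mat4& cameraToViewPV,
                      const Mat4& cameraToProjectionPV)
{
    Mat4 m = Multiply(viewToCameraDepth, frameToOriginDepth);
    m = Multiply(m, originToFramePV);
    m = Multiply(m, cameraToViewPV);
    return Multiply(m, cameraToProjectionPV);
}
```

Because matrix multiplication is not commutative, the order of the arguments matters. If the matrices were stored for column vectors instead, the chain would need to be reversed.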

Apply the mapping to each depth point, then divide the result by its final (homogeneous) coordinate to obtain normalized 2D coordinates.

depthPvPoint = depthPoint * depthToPV

depthPvPoint = depthPvPoint / depthPvPoint[-1]
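A minimal C++ sketch of these two steps follows, assuming a homogeneous depth point (x, y, z, 1) treated as a row vector. The `Vec4`, `Mat4`, `Transform`, and `ProjectDepthPoint` names are hypothetical. The guard against a near-zero final coordinate is an addition for safety, not something the original formulas specify.

```cpp
#include <array>
#include <cmath>

// Hypothetical types: a homogeneous point and a 4 x 4 matrix indexed as m[row][col].
using Vec4 = std::array<float, 4>;
using Mat4 = std::array<std::array<float, 4>, 4>;

// Row vector times matrix: out = p * m.
Vec4 Transform(const Vec4& p, const Mat4& m)
{
    Vec4 out{};
    for (int c = 0; c < 4; ++c)
        for (int r = 0; r < 4; ++r)
            out[c] += p[r] * m[r][c];
    return out;
}

// Map a depth point through depthToPV and divide by the final coordinate.
// Returns false when that coordinate is too close to zero to divide safely.
bool ProjectDepthPoint(const Vec4& depthPoint, const Mat4& depthToPV, Vec4& depthPvPoint)
{
    const Vec4 q = Transform(depthPoint, depthToPV);
    const float w = q[3];
    if (std::fabs(w) < 1e-6f)
        return false;

    depthPvPoint = { q[0] / w, q[1] / w, q[2] / w, 1.0f };
    return true;
}
```

After the division, the first two components are expected to lie in the range [-1, 1] for points that fall inside the PV camera's field of view.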

Now we scale the depth-PV points to the size of the incoming PV camera frames. The y coordinate is flipped because image rows increase downward.

xDepthPvPoint = pvFrameWidth * (depthPvPoint[0] + 1) / 2

yDepthPvPoint = pvFrameHeight * (1 - ((depthPvPoint[1] + 1) / 2))
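The same scaling can be written as a small C++ helper. This is a sketch with hypothetical names (`Pixel`, `NdcToPixel`); it assumes the normalized x and y coordinates lie in [-1, 1], with y pointing up.

```cpp
// Hypothetical pixel coordinate pair in the PV frame.
struct Pixel
{
    float x;
    float y;
};

// Convert normalized coordinates in [-1, 1] to PV frame pixel coordinates.
// The y axis is flipped so that +1 maps to the top row of the image.
Pixel NdcToPixel(float ndcX, float ndcY, float pvFrameWidth, float pvFrameHeight)
{
    Pixel p;
    p.x = pvFrameWidth * (ndcX + 1.0f) / 2.0f;
    p.y = pvFrameHeight * (1.0f - (ndcY + 1.0f) / 2.0f);
    return p;
}
```

For a 1280 x 720 frame, for example, the normalized point (0, 0) lands at the frame center (640, 360), and (-1, 1) lands at the top-left corner (0, 0).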

Finally, we can visualize the output on a canvas in Unity!

The image at the beginning of this post also shows some simple computer vision processing that displays contours in the depth-PV frames, using OpenCV through the included NuGet package. Please see my GitHub repo for more details and sample code.